Optimising for High Latency Environments

CSS Wizardry

SEPTEMBER 16, 2024

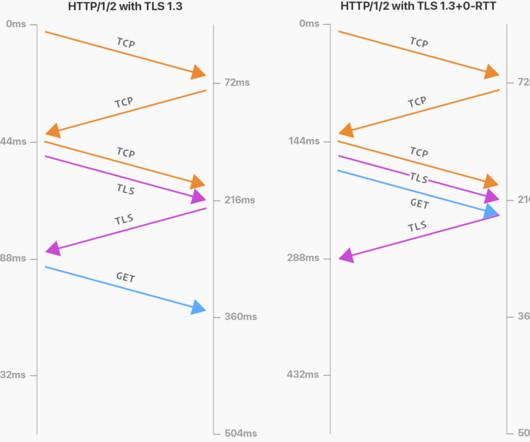

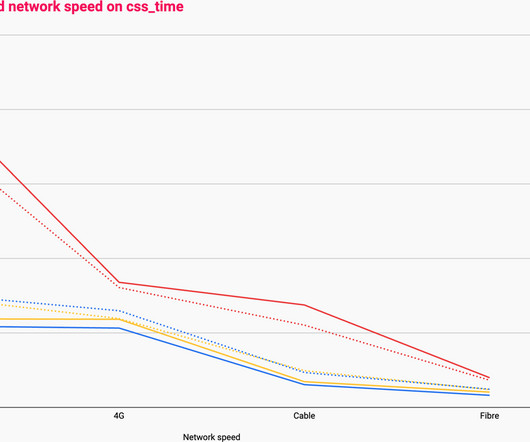

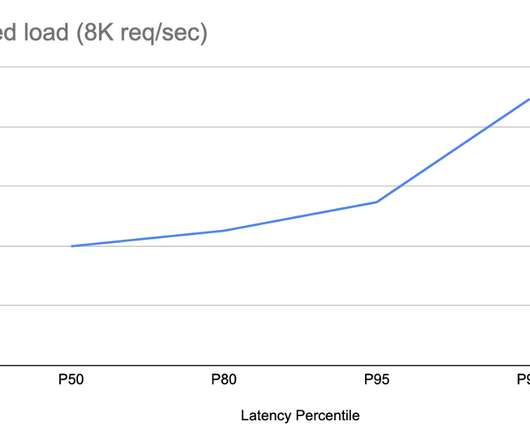

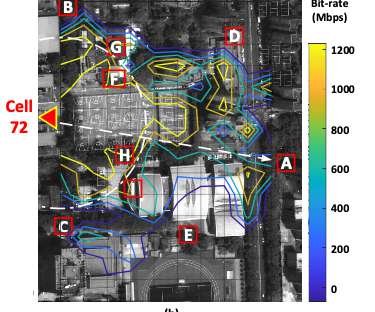

This gives fascinating insights into the network topography of our visitors, and how much we might be impacted by high latency regions.

What is RTT? Round-trip time (RTT) is basically a measure of latency: how long did it take to get from one endpoint to another and back again? RTT isn’t a you-thing, it’s a them-thing: it mostly reflects your visitors’ networks and locations rather than your application code.
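To make the definition concrete, here is a minimal sketch of one rough way to approximate a single round trip in the browser, assuming the TCP handshake (SYN out, SYN-ACK back) takes about one RTT. The `ConnectionTiming` interface and the `approximateRttMs` helper are hypothetical names invented for this illustration; the three fields mirror attributes of the same name on `PerformanceResourceTiming`. This is not necessarily how the data discussed above was collected.

```typescript
// Subset of PerformanceResourceTiming fields used here (all in milliseconds).
interface ConnectionTiming {
  connectStart: number;
  secureConnectionStart: number; // 0 when the connection does not use TLS
  connectEnd: number;
}

// Hypothetical helper: treat the TCP handshake duration as roughly one RTT.
// Returns null when no handshake was timed (for example, a reused connection
// or timings hidden from the page), because the subtraction yields 0.
function approximateRttMs(t: ConnectionTiming): number | null {
  // With TLS, the TCP part ends where the TLS handshake starts;
  // without TLS, it ends at connectEnd.
  const tcpEnd =
    t.secureConnectionStart > 0 ? t.secureConnectionStart : t.connectEnd;
  const handshake = tcpEnd - t.connectStart;
  return handshake > 0 ? handshake : null;
}

// Illustrative values only: TCP handshake from 100ms to 180ms, then TLS.
const sample: ConnectionTiming = {
  connectStart: 100,
  secureConnectionStart: 180,
  connectEnd: 350,
};
console.log(approximateRttMs(sample)); // 80
```

In a browser, the same fields could be read from entries returned by `performance.getEntriesByType("resource")`. Treat the result as a rough signal about the visitor's network, not a precise measurement: server processing delays, connection reuse, and protocols that do not use a TCP handshake all distort it.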
