Netflix’s Distributed Counter Abstraction

The Netflix TechBlog

NOVEMBER 12, 2024

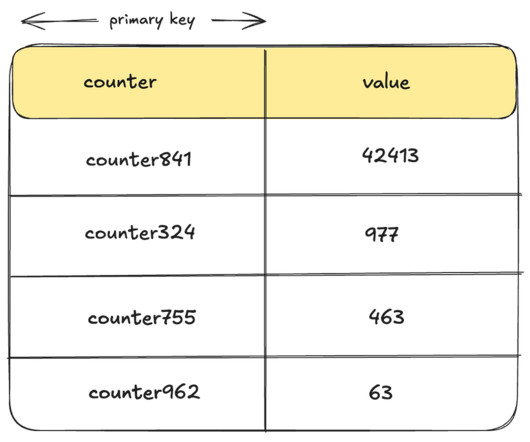

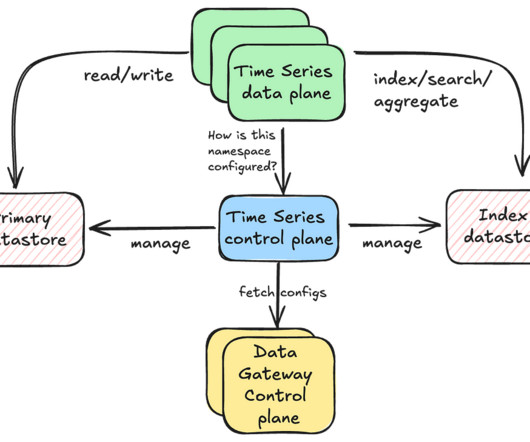

By: Rajiv Shringi , Oleksii Tkachuk , Kartik Sathyanarayanan Introduction In our previous blog post, we introduced Netflix’s TimeSeries Abstraction , a distributed service designed to store and query large volumes of temporal event data with low millisecond latencies. Today, we’re excited to present the Distributed Counter Abstraction.

Let's personalize your content