Better dashboarding with Dynatrace Davis AI: Instant meaningful insights

Dynatrace

JANUARY 21, 2025

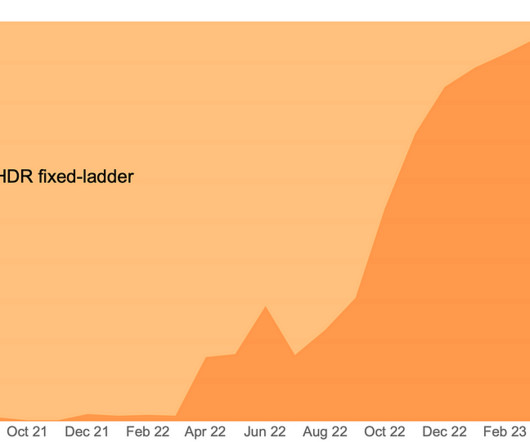

The market is saturated with tools for building eye-catching dashboards, but a dashboard's value ultimately comes down to how well you can interpret the information it presents. Thresholds that adapt to observed behavior help with that interpretation. For example, if you’re monitoring network traffic and the average over the past 7 days is 500 Mbps, the threshold adapts to this baseline instead of staying at a fixed value.
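To make the idea of a baseline-driven threshold concrete, here is a minimal sketch in Python. It is not how Davis AI computes thresholds; it assumes a simple rule (mean of the recent window plus a multiple of the standard deviation), and the function names, the `tolerance` parameter, and the sample values are all hypothetical.

```python
from statistics import mean, stdev


def adaptive_threshold(samples_mbps, tolerance=3.0):
    """Return (baseline, upper_threshold) for a window of recent samples.

    Assumed rule for illustration only: the baseline is the window mean,
    and the threshold sits `tolerance` standard deviations above it.
    """
    if len(samples_mbps) < 2:
        raise ValueError("need at least two samples to estimate spread")
    baseline = mean(samples_mbps)
    spread = stdev(samples_mbps)
    return baseline, baseline + tolerance * spread


def is_anomalous(value_mbps, samples_mbps, tolerance=3.0):
    """Flag a new measurement that exceeds the adaptive upper threshold."""
    _, upper = adaptive_threshold(samples_mbps, tolerance)
    return value_mbps > upper


# Hypothetical daily averages for the past 7 days, averaging 500 Mbps.
last_7_days = [480.0, 510.0, 495.0, 505.0, 520.0, 490.0, 500.0]
baseline, upper = adaptive_threshold(last_7_days)
```

With these sample values the baseline is 500 Mbps, and a reading of 600 Mbps would be flagged while 520 Mbps would not. As the window slides forward and the average shifts, the threshold moves with it, which is the behavior the example above describes.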
