Efficient Multimodal Data Processing: A Technical Deep Dive

DZone

FEBRUARY 27, 2025

Handling multimodal data spanning text, images, videos, and sensor inputs requires resilient architecture to manage the diversity of formats and scale.

The Netflix TechBlog

NOVEMBER 17, 2022

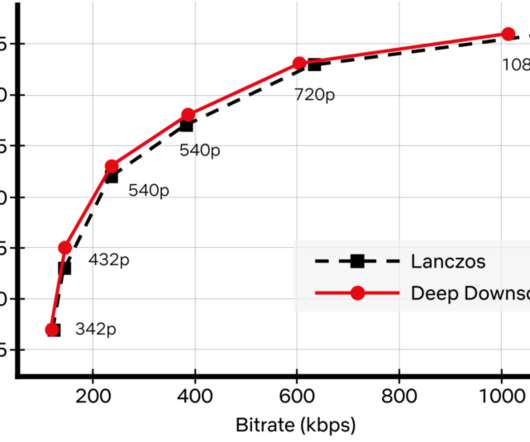

Bampis, Li-Heng Chen and Zhi Li

When you are binge-watching the latest season of Stranger Things or Ozark, we strive to deliver the best possible video quality to your eyes. To do so, we continuously push the boundaries of streaming video quality and leverage the best video technologies.

The Netflix TechBlog

JANUARY 10, 2024

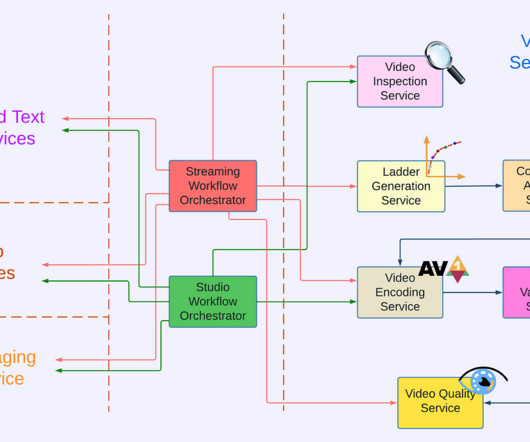

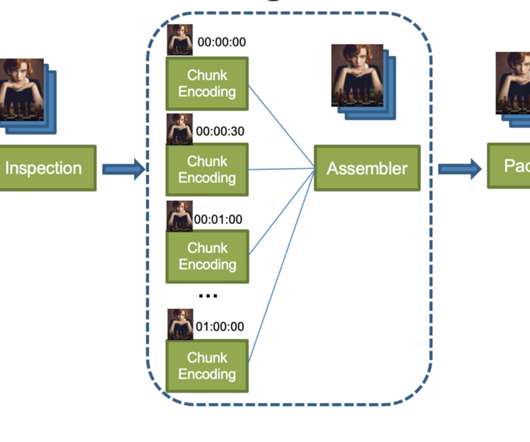

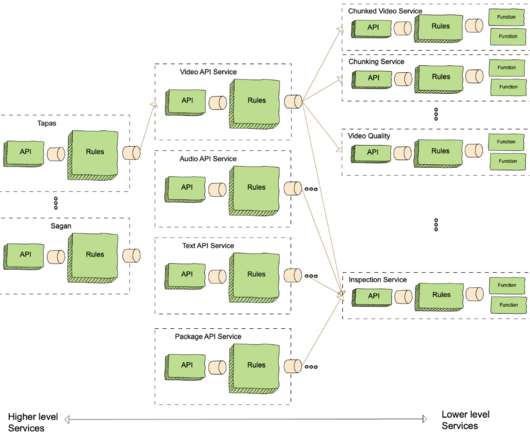

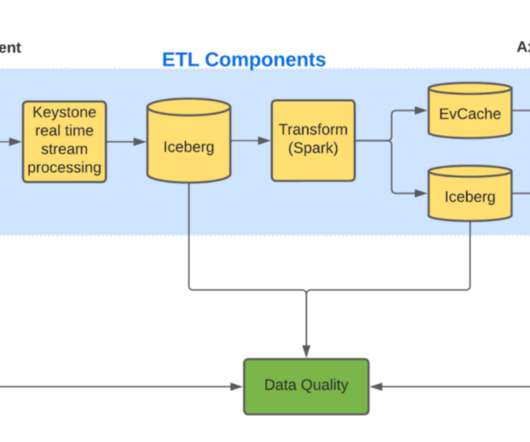

The Netflix video processing pipeline went live with the launch of our streaming service in 2007. This architecture shift greatly reduced the processing latency and increased system resiliency. For example, in Reloaded the video quality calculation was implemented inside the video encoder module.

Dynatrace

JANUARY 26, 2021

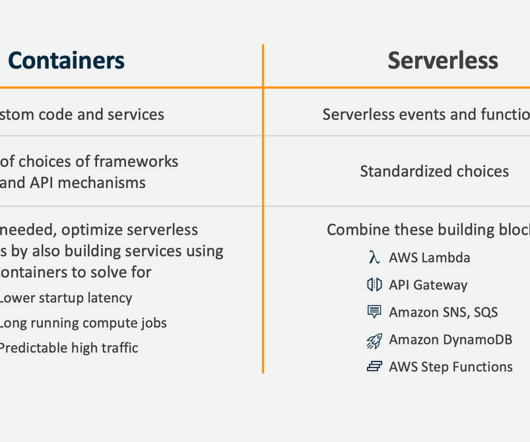

This allows teams to sidestep much of the cost and time associated with managing hardware, platforms, and operating systems on-premises, while also gaining the flexibility to scale rapidly and efficiently. REST APIs, authentication, databases, email, and video processing all have a home on serverless platforms.

The Netflix TechBlog

MARCH 28, 2025

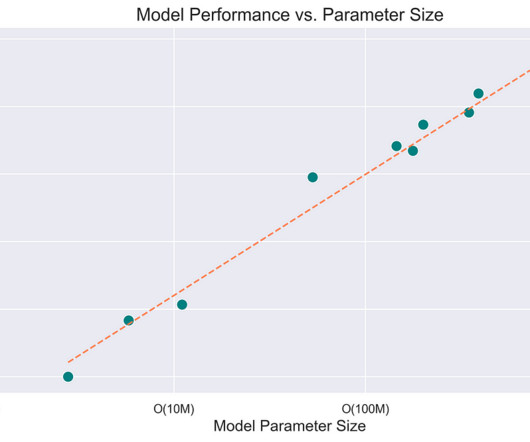

Yet, many are confined to a brief temporal window due to constraints in serving latency or training costs. These insights have shaped the design of our foundation model, enabling a transition from maintaining numerous small, specialized models to building a scalable, efficient system.

The Netflix TechBlog

JULY 12, 2019

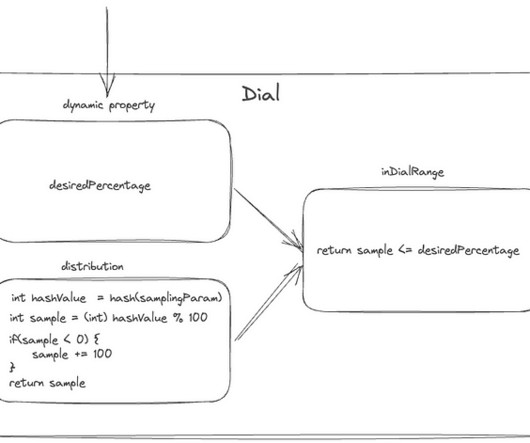

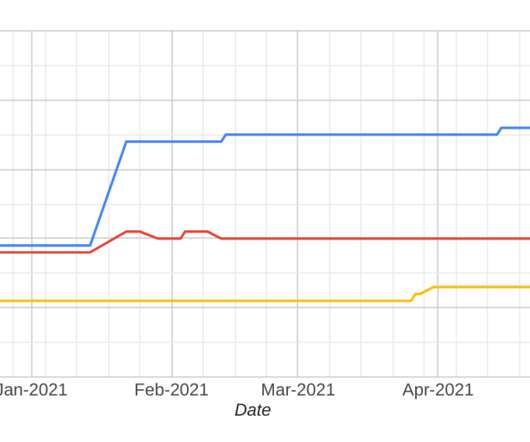

Gatekeeper is the system at Netflix responsible for evaluating the “liveness” of videos and assets on the site. Gatekeeper accomplishes its prescribed task by aggregating data from multiple upstream systems, applying some business logic, then producing an output detailing the status of each video in each country.

The Netflix TechBlog

NOVEMBER 2, 2021

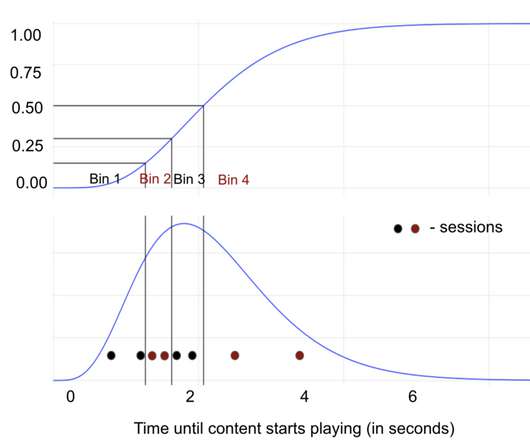

Moorthy and Zhi Li

Introduction

Measuring video quality at scale is an essential component of the Netflix streaming pipeline. Perceptual quality measurements are used to drive video encoding optimizations, perform video codec comparisons, carry out A/B testing, and optimize streaming QoE decisions, to mention a few.
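The excerpt does not say which perceptual metric drives these decisions. As a simple and explicitly non-perceptual stand-in, here is a minimal sketch of PSNR (peak signal-to-noise ratio) between a reference frame and a distorted frame, assuming both are given as equal-length sequences of 8-bit sample values. The function name `psnr` is illustrative only.

```python
import math

def psnr(reference, distorted, max_value=255):
    """PSNR in dB between two equal-length sequences of sample values (8-bit by default)."""
    if not reference or len(reference) != len(distorted):
        raise ValueError("inputs must be non-empty and of equal length")
    # Mean squared error over all samples.
    mse = sum((r - d) ** 2 for r, d in zip(reference, distorted)) / len(reference)
    if mse == 0:
        return math.inf  # identical inputs: no noise at all
    return 10 * math.log10(max_value ** 2 / mse)

# Every sample is off by one level, so MSE = 1.
print(round(psnr([255] * 4, [254] * 4), 2))  # 48.13
```

PSNR only measures pixel-level error, which is why perceptual metrics exist in the first place; treat this as a baseline for intuition, not as a substitute.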

The Netflix TechBlog

SEPTEMBER 29, 2022

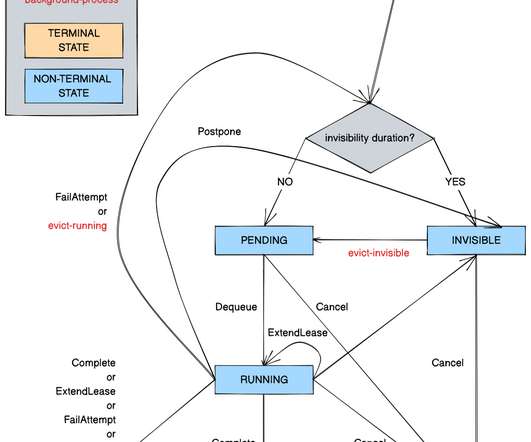

Timestone: Netflix’s High-Throughput, Low-Latency Priority Queueing System with Built-in Support for Non-Parallelizable Workloads

by Kostas Christidis

Introduction

Timestone is a high-throughput, low-latency priority queueing system we built in-house to support the needs of Cosmos, our media encoding platform. Over the past 2.5
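The excerpt does not describe how Timestone enforces non-parallelizable workloads. The sketch below is a hypothetical, single-process illustration of the general idea: a priority queue (lower number means higher priority) in which messages that share an exclusivity key are never handed to two workers at once. The class name, the `key` parameter, and the `ack` step are assumptions, not Timestone's API.

```python
import heapq
import itertools

class ExclusivePriorityQueue:
    """Hypothetical toy queue: lower number = higher priority. Messages sharing an
    exclusivity key are never dispatched concurrently."""

    def __init__(self):
        self._heap = []
        self._seq = itertools.count()  # tie-breaker: FIFO order within a priority
        self._in_flight = set()        # exclusivity keys currently leased to a worker

    def enqueue(self, priority, payload, key=None):
        heapq.heappush(self._heap, (priority, next(self._seq), key, payload))

    def dequeue(self):
        """Return (key, payload) for the best eligible message, or None if all are blocked."""
        skipped, result = [], None
        while self._heap:
            item = heapq.heappop(self._heap)
            _, _, key, payload = item
            if key is not None and key in self._in_flight:
                skipped.append(item)  # blocked: a message with this key is still running
                continue
            if key is not None:
                self._in_flight.add(key)
            result = (key, payload)
            break
        for item in skipped:  # put blocked messages back for later
            heapq.heappush(self._heap, item)
        return result

    def ack(self, key):
        """Mark work for `key` as finished so the next message with that key can run."""
        self._in_flight.discard(key)

q = ExclusivePriorityQueue()
q.enqueue(1, "encode-A-part1", key="A")
q.enqueue(1, "encode-A-part2", key="A")
q.enqueue(2, "encode-B", key="B")
print(q.dequeue())  # ('A', 'encode-A-part1')
print(q.dequeue())  # ('B', 'encode-B'), since A's second part is blocked
print(q.dequeue())  # None
q.ack("A")
print(q.dequeue())  # ('A', 'encode-A-part2')
```

Here the second message for key "A" waits until the first is acknowledged, while a lower-priority message for "B" is dispatched in the meantime. A production system would also need durability, lease timeouts, and an index that avoids re-scanning blocked messages.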

The Netflix TechBlog

SEPTEMBER 24, 2021

After content ingestion, inspection and encoding, the packaging step encapsulates encoded video and audio in codec agnostic container formats and provides features such as audio video synchronization, random access and DRM protection. Uploading and downloading data always come with a penalty, namely latency.

The Netflix TechBlog

SEPTEMBER 2, 2020

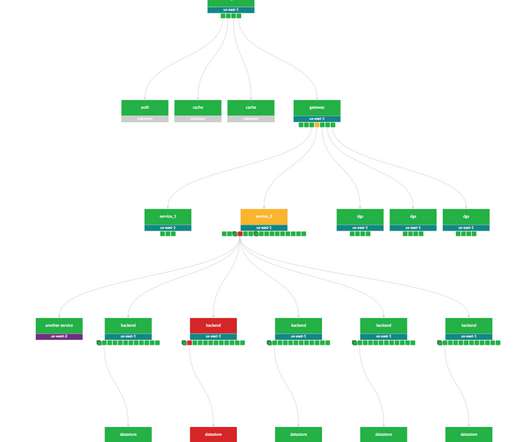

Edgar helps Netflix teams troubleshoot distributed systems efficiently with the help of a summarized presentation of request tracing, logs, analysis, and metadata. Telltale provides Edgar with latency benchmarks that indicate if the individual trace’s latency is abnormal for this given service.

What is Edgar?

Dynatrace

JUNE 1, 2023

Note: you might hear the term latency used instead of response time. Both latency and response time are critical to ensure reliability. Latency typically refers to the time it takes for a single request to travel from its source to its destination. Latency primarily focuses on the time spent in transit.

Adrian Cockcroft

MAY 6, 2023

This is only one of many microservices that make up the Prime Video application. A real-time user experience analytics engine for live video that looked at all users rather than a subsample. His first edition in 2015 was foundational, and he updated it in 2021 with a second edition. Finally, what were they building?

The Netflix TechBlog

MARCH 1, 2021

It supports both high throughput services that consume hundreds of thousands of CPUs at a time, and latency-sensitive workloads where humans are waiting for the results of a computation. In the diagram below of a typical Cosmos service, clients send requests to a Video encoder service API layer. debian packages).

The Netflix TechBlog

MARCH 5, 2024

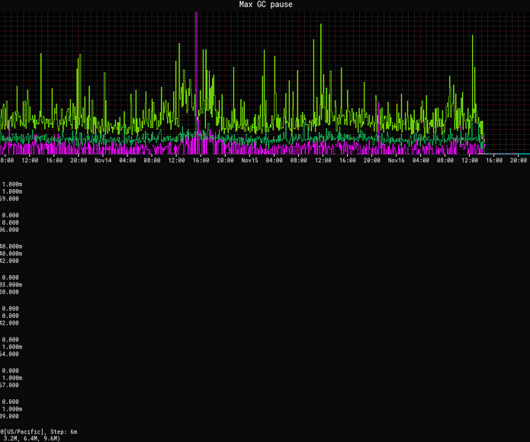

More than half of our critical streaming video services are now running on JDK 21 with Generational ZGC, so it’s a good time to talk about our experience and the benefits we’ve seen.

Reduced tail latencies

In both our gRPC and DGS Framework services, GC pauses are a significant source of tail latencies.

The Netflix TechBlog

OCTOBER 8, 2024

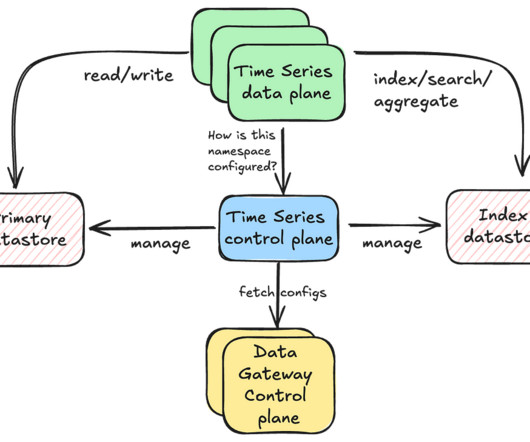

Rajiv Shringi, Vinay Chella, Kaidan Fullerton, Oleksii Tkachuk, Joey Lynch

Introduction

As Netflix continues to expand and diversify into various sectors like Video on Demand and Gaming, the ability to ingest and store vast amounts of temporal data (often reaching petabytes) with millisecond access latency has become increasingly vital.

The Netflix TechBlog

OCTOBER 27, 2020

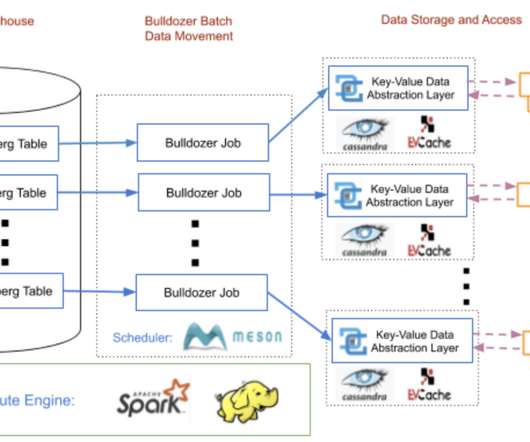

Data scientists and engineers collect this data from our subscribers and videos, and implement data analytics models to discover customer behaviour with the goal of maximizing user joy. The data warehouse is not designed to serve point requests from microservices with low latency. Moving data with Bulldozer at Netflix.

The Netflix TechBlog

SEPTEMBER 8, 2020

As an example, to render the screen shown here, the app sends a query that looks like this: paths: ["videos", 80154610, "detail"]. A path starts from a root object and is followed by a sequence of keys that we want to retrieve the data for. Instead, it is part of a different path: [videos, <id>, similars].
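A minimal sketch of the path idea described above: a path is resolved by starting at a root object and following one key at a time. The in-memory `GRAPH`, its placeholder values (including the `similars` IDs), and the `resolve` helper are hypothetical; they are not Netflix's data model or API.

```python
from typing import Any, Sequence

# Hypothetical in-memory graph; a real service would fetch data from backends.
GRAPH = {
    "videos": {
        80154610: {
            "detail": {"title": "placeholder title"},
            "similars": [80000001, 80000002],  # placeholder IDs
        }
    }
}

def resolve(root: dict, path: Sequence[Any]) -> Any:
    """Walk `path` key by key from `root`; raise KeyError naming the first missing prefix."""
    node: Any = root
    for depth, key in enumerate(path):
        if not isinstance(node, dict) or key not in node:
            raise KeyError(f"no value at {list(path[:depth + 1])}")
        node = node[key]
    return node

print(resolve(GRAPH, ["videos", 80154610, "detail"]))  # {'title': 'placeholder title'}
```

The same root and ID prefix can lead to different data depending on the final key, which is why "similars" lives on a separate path from "detail".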

The Netflix TechBlog

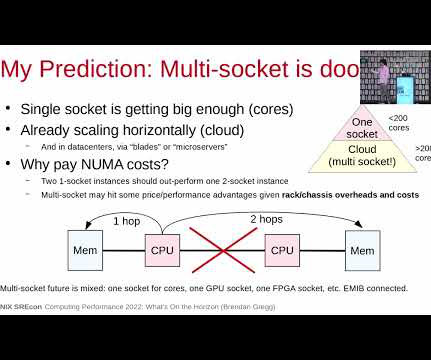

JUNE 4, 2019

Because microprocessors are so fast, computer architecture design has evolved towards adding various levels of caching between compute units and the main memory, in order to hide the latency of bringing the bits to the brains. This avoids thrashing caches too much for B and evens out the pressure on the L3 caches of the machine.

Dynatrace

JANUARY 13, 2022

Analyzing a clinician’s clickstream when using an electronic medical record system to better improve the efficiency of data entry. Providing insight into the service latency to help developers identify poorly performing code. Tracking users’ paths through the conversion funnel and using that data for attributing revenue.

The Netflix TechBlog

JUNE 13, 2023

By collecting and analyzing key performance metrics of the service over time, we can assess the impact of the new changes and determine if they meet the availability, latency, and performance requirements. One can perform this comparison live on the request path or offline based on the latency requirements of the particular use case.

The Netflix TechBlog

APRIL 26, 2022

Figure 1: Netflix ML Architecture Fact: A fact is data about our members or videos. An example of data about members is the video they had watched or added to their My List. An example of video data is video metadata, like the length of a video. Time is a critical component of Axion. When

The Netflix TechBlog

OCTOBER 19, 2020

Investigating a video streaming failure consists of inspecting all aspects of a member account. If we had an ID for each streaming session then distributed tracing could easily reconstruct session failure by providing service topology, retry and error tags, and latency measurements for all service calls. Storage: don’t break the bank!

The Netflix TechBlog

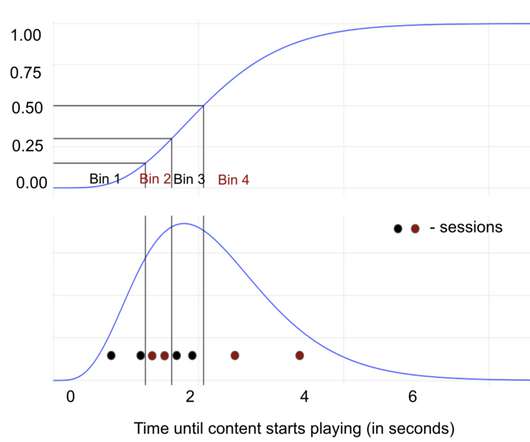

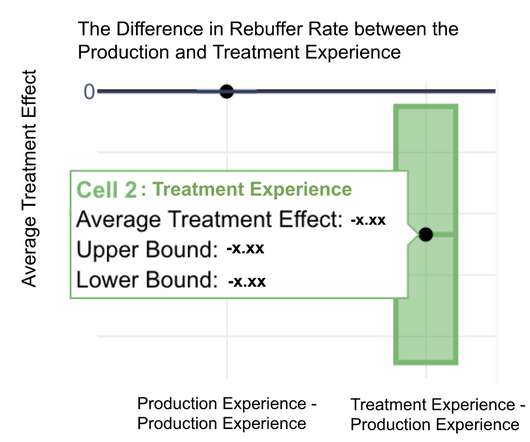

OCTOBER 26, 2021

Say at Netflix that we run a test that aims to reduce some measure of latency, such as the delay between a member pressing play and video playback commencing. As a result, if the test treatment results in a small reduction in the latency metric, it’s hard to successfully identify?

The Netflix TechBlog

DECEMBER 2, 2019

To do this, we have teams of experts that develop more efficient video and audio encodes, refine the adaptive streaming algorithm, and optimize content placement on the distributed servers that host the shows and movies that you watch. Oftentimes, simply moving the mean or median of a metric is not the experimenter’s goal.
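To illustrate why moving the mean or median may not be the goal, here is a small sketch comparing the median with a nearest-rank 95th percentile on two hypothetical samples of play-delay times in milliseconds. The numbers and the `percentile` helper are invented for illustration: the two samples share the same median, while their tails differ sharply.

```python
import math
from statistics import median

def percentile(samples, p):
    """Nearest-rank percentile: smallest value with at least p% of samples at or below it."""
    if not samples or not 0 < p <= 100:
        raise ValueError("need a non-empty sample and 0 < p <= 100")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]

# Hypothetical play-delay samples in milliseconds.
control = [100] * 18 + [900, 1000]
treatment = [100] * 18 + [300, 400]

print(median(control), median(treatment))                  # 100.0 100.0
print(percentile(control, 95), percentile(treatment, 95))  # 900 300
```

A test judged only on the median would report no change here, even though the slowest sessions improved substantially.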

VoltDB

FEBRUARY 29, 2024

In this fast-paced ecosystem, two vital elements determine the efficiency of this traffic: latency and throughput.

LATENCY: THE WAITING GAME

Latency is like the time you spend waiting in line at your local coffee shop. All these moments combined represent latency – the time it takes for your order to reach your hands.

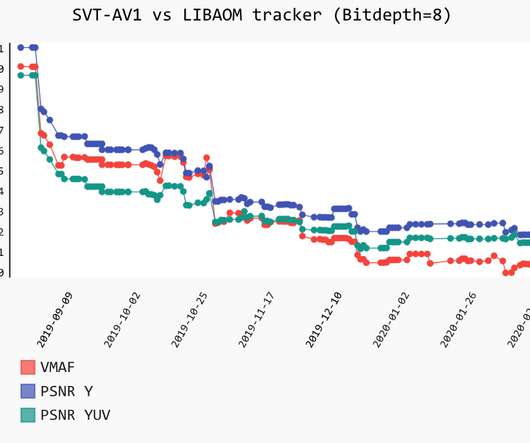

The Netflix TechBlog

MARCH 13, 2020

The teams have been working closely on SVT-AV1 development, discussing architectural decisions, implementing new tools, and improving compression efficiency. The SVT-AV1 encoder supports all AV1 tools which contribute to compression efficiency. As seen below, SVT-AV1 demonstrates 16.5%

IO River

NOVEMBER 2, 2023

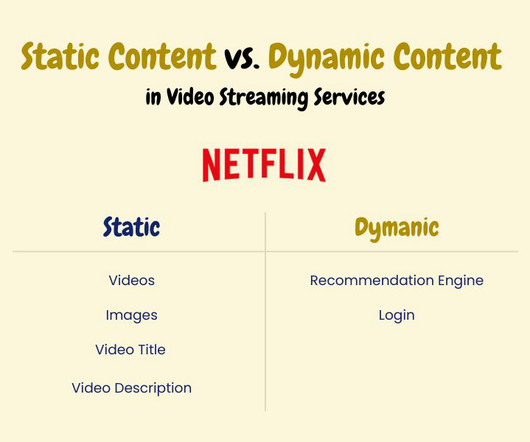

Now, with viewers all over the world expecting flawless and high-definition streaming, video providers have their work cut out for them.

What Comprises Video Streaming - Traffic Characteristics

With the emphasis on a high-quality streaming experience, the optimization starts from the very core. Only overflow will await you.

All Things Distributed

AUGUST 20, 2015

Developers need efficient methods to store, traverse, and query these relationships. Social media apps navigate relationships between friends, photos, videos, pages, and followers. In supply chain management, connections between airports, warehouses, and retail aisles are critical for cost and time optimization. Enter graph databases.
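As a minimal sketch of traversing such relationships, the breadth-first search below finds every account reachable within a given number of hops in an adjacency-list graph (for example, friends of friends). The `friends` data and the `within_hops` helper are hypothetical; graph databases expose this kind of traversal through their own query languages.

```python
from collections import deque

def within_hops(graph, start, max_hops):
    """BFS: return {node: hop_count} for nodes reachable from `start` in <= max_hops edges."""
    seen = {start: 0}
    frontier = deque([start])
    while frontier:
        node = frontier.popleft()
        if seen[node] == max_hops:
            continue  # do not expand past the hop limit
        for neighbor in graph.get(node, ()):
            if neighbor not in seen:
                seen[neighbor] = seen[node] + 1
                frontier.append(neighbor)
    return seen

# Hypothetical directed "follows" edges.
friends = {"ana": ["ben", "cai"], "ben": ["dev"], "cai": ["dev", "eli"], "dev": ["fay"]}
print(within_hops(friends, "ana", 2))
# {'ana': 0, 'ben': 1, 'cai': 1, 'dev': 2, 'eli': 2}
```

Because BFS visits nodes in order of distance, each node's recorded hop count is its shortest path from the start.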

Brendan Gregg

FEBRUARY 28, 2023

The video is now on YouTube, and the slides are online and available as a PDF. In Q&A I was asked about CXL (Compute Express Link), which was fortunate: I had planned to cover it and then forgot, so the question let me talk about it (although the Q&A is missing from the video). Ford, et al., “TCP

Alex Russell

APRIL 29, 2021

Next-generation video codecs, supported in many modern chips, but also a licensing minefield. Necessary for building competitive video experiences, including messaging and videoconferencing. Fundamentally enabling for video creation apps. Without it, video recordings must fit in memory, leading to crashes. getUserMedia().

All Things Distributed

OCTOBER 12, 2016

Three years ago, as part of our AWS Fast Data journey we introduced Amazon ElastiCache for Redis , a fully managed in-memory data store that operates at sub-millisecond latency. ElastiCache for Redis Multi-AZ capability is built to handle any failover case for Redis Cluster with robustness and efficiency. Building upon Redis.

All Things Distributed

MARCH 11, 2016

AWS needed to be very conscious as a service provider about our costs so that we could afford to offer our services to customers and identify areas where we could drive operational efficiencies to cut costs further, and then offer those savings back to our customers in the form of lower prices. The importance of the network. No gatekeepers.

The Morning Paper

SEPTEMBER 10, 2019

When each of those use cases is powered by a dedicated back-end, investments in better performance, improved scalability, efficiency, etc. are divided. That’s hard for many reasons, including the differing trade-offs between throughput and latency that need to be made across the use cases. Reporting and dashboarding use cases (e.g.

All Things Distributed

SEPTEMBER 5, 2013

Meanwhile, mobile app developers have shown that they care a lot about getting to market quickly, the ability to easily scale their app from 100 users to 1 million users on day 1, and the extremely low-latency database performance that is crucial to ensure a great end-user experience. Calculate great circle distances and perform spherical math.
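The excerpt mentions calculating great circle distances. Here is a minimal sketch using the haversine formula, assuming a spherical Earth with a mean radius of 6,371 km (an approximation). It is not code from the library the excerpt describes, and `haversine_km` is an illustrative name.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius; spherical approximation

def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in km between two (lat, lon) points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    # Clamp to 1.0 so floating-point rounding cannot push asin outside its domain.
    return 2 * EARTH_RADIUS_KM * math.asin(min(1.0, math.sqrt(a)))

# Equator to North Pole along a meridian: a quarter of a great circle.
print(round(haversine_km(0, 0, 90, 0), 1))  # 10007.5
```

The haversine form stays numerically stable for short distances, where the spherical law of cosines loses precision.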

All Things Distributed

DECEMBER 12, 2013

Moreover, a GSI’s performance is designed to meet DynamoDB’s single-digit millisecond latency: you can add items to a Users table for a gaming app with tens of millions of users with UserId as the primary key, but retrieve them based on their home city, with no reduction in query performance. Efficient Queries.
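Conceptually, a secondary index is a second lookup structure keyed on a different attribute and kept in sync with the base table on writes. The toy `UsersTable` below is a hypothetical in-memory analogy of the excerpt's example (primary key `UserId`, lookups by home city). It is not DynamoDB's API. It also ignores partitioning and the fact that DynamoDB propagates GSI updates asynchronously.

```python
from collections import defaultdict

class UsersTable:
    """Hypothetical toy table: primary key user_id plus a secondary index on home_city."""

    def __init__(self):
        self._items = {}                  # user_id -> item
        self._by_city = defaultdict(set)  # home_city -> {user_id, ...}

    def put(self, user_id, home_city, **attrs):
        """Insert or fully replace an item, keeping the city index in sync."""
        old = self._items.get(user_id)
        if old is not None:
            self._by_city[old["home_city"]].discard(user_id)
        self._items[user_id] = {"user_id": user_id, "home_city": home_city, **attrs}
        self._by_city[home_city].add(user_id)

    def get(self, user_id):
        return self._items.get(user_id)

    def query_by_city(self, home_city):
        """Index lookup: touches only matching items instead of scanning the table."""
        return [self._items[uid] for uid in sorted(self._by_city.get(home_city, ()))]

users = UsersTable()
users.put("u1", "Seattle")
users.put("u2", "Austin")
users.put("u3", "Seattle")
print([item["user_id"] for item in users.query_by_city("Seattle")])  # ['u1', 'u3']
```

The trade-off is extra work and storage on every write in exchange for fast reads by a non-key attribute.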

ACM Sigarch

DECEMBER 6, 2018

Each of these categories opens up challenging problems in AI/visual algorithms, high-density computing, bandwidth/latency, and distributed systems. One such example is activity recognition in motion video (such as LRCN, Convnets), which may entail running combinations of both convolutional and recurrent neural networks simultaneously.

Scalegrid

JUNE 7, 2024

While ensuring that messages are durable brings several advantages, it’s important to note that it doesn’t significantly degrade performance regarding throughput or latency. Indeed, RabbitMQ can process streaming data in real time and thus can be apt for applications involving constant, voluminous data streams, such as video platforms.

Dotcom-Monitor

DECEMBER 8, 2021

By ITIL definition, the service desk may take the form of incident resolution or service requests, but whatever the case, the primary goal of the service desk is to provide quick and efficient service. This helps to improve efficiency and ensures that information is consistent, up-to-date, and available. Problem Management.

Smashing Magazine

SEPTEMBER 28, 2022

Efficient use of a client’s resources and bandwidth can greatly improve your application’s performance. Caching Schemes.

VoltDB

JUNE 1, 2017

Latency Optimizers” – need support for very large federated deployments. 5G expects a latency of 1 ms. Light travels about 186 miles in 1 ms, so the data center can be no more than 186 miles away for a one-way trip, or 93 miles away for a round trip, assuming an instant response. Everything else is just Horns and Valkyries ….
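The distance bound above is simple arithmetic. Here is a minimal sketch, assuming propagation at the speed of light in a vacuum (about 186,282 miles per second) and an instantaneous server response. The function name is illustrative.

```python
SPEED_OF_LIGHT_MILES_PER_S = 186_282  # in a vacuum

def max_one_way_distance_miles(rtt_budget_s):
    """Farthest a server can be if the whole budget is spent on a light-speed round trip
    and the server responds instantly: half the distance light covers in the budget."""
    return SPEED_OF_LIGHT_MILES_PER_S * rtt_budget_s / 2

print(round(max_one_way_distance_miles(0.001), 1))  # 93.1
```

Signals in optical fiber travel noticeably slower than light in a vacuum, and real servers need time to respond, so practical bounds are tighter than this.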
