API Design Principles for Optimal Performance and Scalability

DZone

JUNE 22, 2023

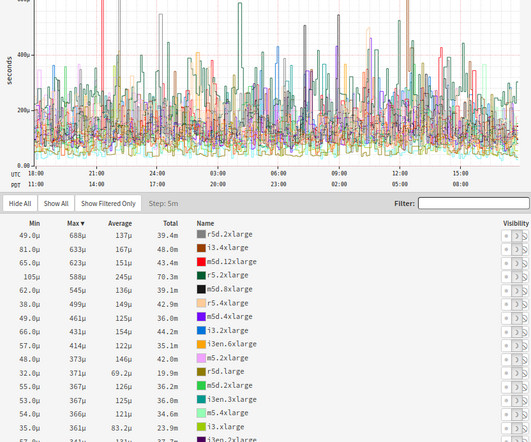

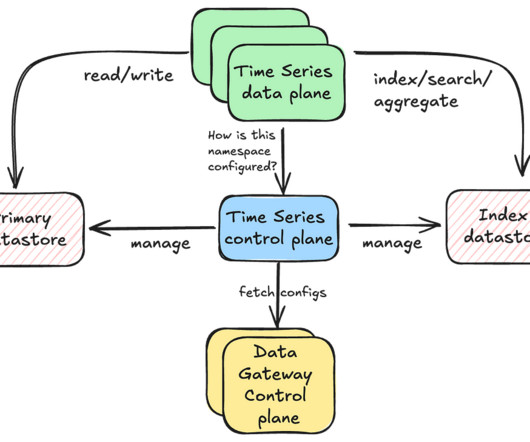

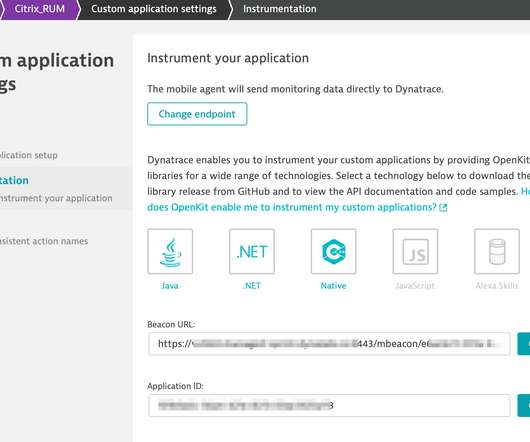

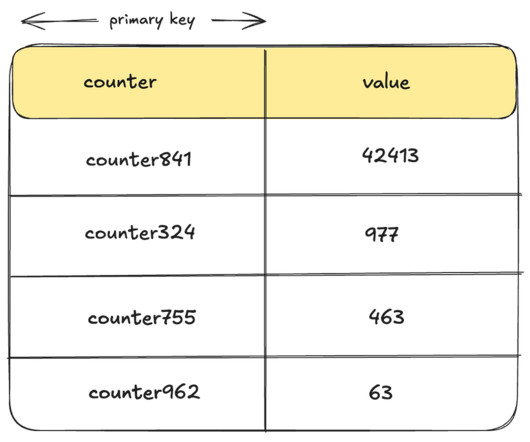

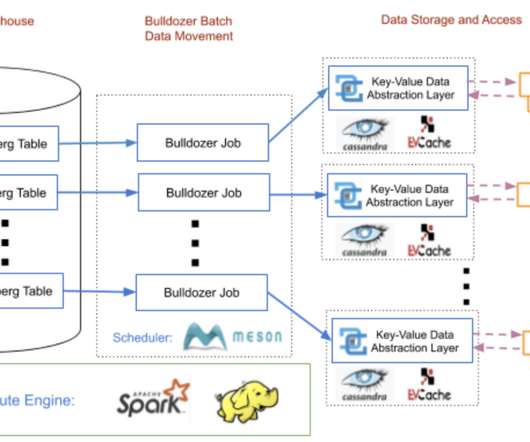

API performance optimization is the process of improving the speed, scalability, and reliability of APIs. This article aims to help developers, technical managers, and business owners understand why that optimization matters and how they can apply it to their own APIs.

Let's personalize your content