Java Is Greener on Arm

DZone

OCTOBER 30, 2024

Hardware and software are evolving in parallel, and combining the best of modern software development with the latest Arm hardware can yield impressive performance, cost, and efficiency results.

ACM Sigarch

APRIL 2, 2025

To create a CPU core that can execute a large number of instructions in parallel, it is necessary to improve both the architecture (which includes the overall CPU design and the instruction set architecture, or ISA, design) and the microarchitecture, which refers to the hardware design that optimizes instruction execution.

This site is protected by reCAPTCHA and the Google Privacy Policy and Terms of Service apply.

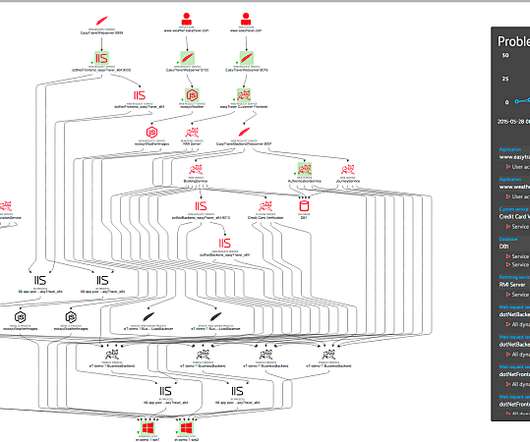

Dynatrace

OCTOBER 23, 2023

Microsoft Hyper-V is a virtualization platform that manages virtual machines (VMs) on Windows-based systems. It enables multiple operating systems to run simultaneously on the same physical hardware and integrates closely with Windows-hosted services, which leads to a more efficient and streamlined experience for users.

Dynatrace

JANUARY 26, 2021

This allows teams to sidestep much of the cost and time associated with managing hardware, platforms, and operating systems on-premises, while also gaining the flexibility to scale rapidly and efficiently. In a serverless architecture, applications are distributed to meet demand and scale requirements efficiently.

Dynatrace

APRIL 15, 2024

Government agencies aim to meet their citizens’ needs as efficiently and effectively as possible to ensure maximum impact from every tax dollar invested. Every hardware, software, cloud infrastructure component, container, open source tool, and microservice generates records of every activity within modern environments.

DZone

DECEMBER 20, 2023

This ground-breaking method enables users to run multiple virtual machines on a single physical server, increasing flexibility, lowering hardware costs, and improving efficiency. Mini PCs have become effective virtualization tools in this setting, providing a portable yet effective solution for a variety of applications.

DZone

AUGUST 16, 2019

Application scalability is the potential of an application to grow over time, efficiently handling more and more requests per minute (RPM). It's not a simple tweak you can turn on or off; it's a long-term process that touches almost every item in your stack, on both the hardware and software sides of the system.

DZone

AUGUST 7, 2023

While some distributions are aimed at experienced Linux users, there are distributions that cater to the needs of beginners or users with older hardware. Lightweight Linux distributions are specifically designed to be low-resource, efficient, and quick, offering users a smooth and responsive user experience.

DZone

SEPTEMBER 16, 2024

Efficient database scaling becomes crucial to maintain performance, ensure reliability, and manage large volumes of data. Scaling a database effectively involves a combination of strategies that optimize both hardware and software resources to handle increasing loads.

DZone

JANUARY 23, 2022

Hardware: server and storage hardware or software faults, such as disk failures, full disks, other hardware failures, servers running out of allocated resources, server software behaving abnormally, and intra-DC network connectivity issues. Mitigating these requires redundancy in power, network, cooling systems, and possibly everything else relevant.
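The value of redundancy can be made concrete with basic availability arithmetic. The sketch below is not from the article: it assumes component failures are independent and uses hypothetical availability figures. Redundant components in parallel give an availability of 1 - ∏(1 - a_i), while components that must all be up (in series) give ∏ a_i.

```python
from math import prod


def parallel_availability(availabilities):
    """System is up if ANY redundant component is up.

    Assumes independent failures; a shared power or cooling fault breaks this.
    """
    return 1 - prod(1 - a for a in availabilities)


def serial_availability(availabilities):
    """System is up only if EVERY component in the chain is up."""
    return prod(availabilities)


# Hypothetical figures: two redundant power feeds at 99% each,
# in series with a single network link at 99.9%.
power = parallel_availability([0.99, 0.99])   # 1 - 0.01 * 0.01 = 0.9999
system = serial_availability([power, 0.999])  # 0.9999 * 0.999 ≈ 0.9989
print(f"power: {power:.4f}, system: {system:.4f}")
```

Note how the non-redundant network link, not the doubled power feed, dominates the result; this is why redundancy is usually needed across "possibly everything else relevant".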

DZone

OCTOBER 6, 2021

Differences in OS, screen size, screen density, and hardware can all affect how an app behaves and impact the user experience. In order to ship new updates of your app with confidence, you should efficiently analyze app performance during development to identify issues before they reach the end-users.

DZone

MAY 1, 2023

CPU isolation and efficient system management are critical for any application that requires low latency and high-performance computing. In modern production environments, numerous hardware and software hooks can be adjusted to improve latency and throughput.

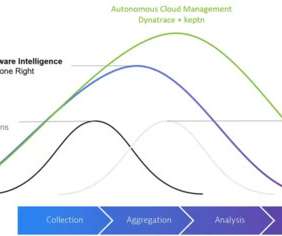

Dynatrace

MARCH 20, 2024

In this article, we’ll explore these challenges in detail and introduce Keptn, an open source project that addresses these issues, enhancing Kubernetes observability for smoother and more efficient deployments. Vulnerabilities or hardware failures can disrupt deployments and compromise application security.

Scalegrid

FEBRUARY 6, 2025

Kafka scales efficiently for large data workloads, while RabbitMQ provides strong message durability and precise control over message delivery. Message brokers handle validation, routing, storage, and delivery, ensuring efficient and reliable communication. This allows Kafka clusters to handle high-throughput workloads efficiently.

Scalegrid

JANUARY 5, 2024

Enhanced data security, better data integrity, and efficient access to information. Despite initial investment costs, DBMS presents long-term savings and improved efficiency through automated processes, efficient query optimizations, and scalability, contributing to enhanced decision-making and end-user productivity.

Scalegrid

FEBRUARY 24, 2025

This guide will cover how to distribute workloads across multiple nodes, set up efficient clustering, and implement robust load-balancing techniques. This leadership ensures that messages are managed efficiently, providing the fastest fail-over among replicated queue types.

Dynatrace

JULY 24, 2024

By leveraging Dynatrace observability on Red Hat OpenShift running on Linux, you can accelerate modernization to hybrid cloud and increase operational efficiencies with greater visibility across the full stack from hardware through application processes.

DZone

JULY 10, 2024

Viking Enterprise Solutions (VES), a division of Sanmina Corporation, stands at the forefront of addressing these challenges with its innovative hardware and software solutions. As a product division of Sanmina, a $9 billion public company, VES leverages decades of manufacturing expertise to deliver cutting-edge data center solutions.

Dynatrace

NOVEMBER 3, 2022

The containerization craze has continued for enterprises, with benefits such as portability, efficiency, and scalability. In FaaS environments, providers manage all the hardware. Alternatively, in a CaaS model, businesses can directly access and manage containers on hardware. million in 2020. CaaS vs. FaaS.

Scalegrid

MAY 13, 2020

Greenplum Database is an open-source, hardware-agnostic MPP database for analytics, based on PostgreSQL and developed by Pivotal, which was later acquired by VMware. Greenplum's high performance eliminates the challenge most RDBMSs face in scaling to petabyte levels of data, since it can scale linearly to process data efficiently.

Dynatrace

JULY 11, 2024

They’ve gone from just maintaining their organization’s hardware and software to becoming an essential function for meeting strategic business objectives. Today, IT services have a direct impact on almost every key business performance indicator, from revenue and conversions to customer satisfaction and operational efficiency.

Dynatrace

APRIL 5, 2021

The 2014 launch of AWS Lambda marked a milestone in how organizations use cloud services to deliver their applications more efficiently, by running functions at the edge of the cloud without the cost and operational overhead of on-premises servers. What is AWS Lambda? How does AWS Lambda work?

Dynatrace

JULY 8, 2019

When we wanted to add a location, we had to ship hardware and get someone to install that hardware in a rack with power and network. Hardware was outdated. Fixed hardware is a single point of failure – even when we had redundant machines. Keep hardware and browsers updated at all times. Sound easy?

DZone

JANUARY 30, 2024

This article explores the role of CMDB in empowering IT infrastructure management, enhancing operational efficiency, and fostering strategic decision-making. These CIs include hardware, software, network devices, and other elements critical to an organization's IT operations.

DZone

OCTOBER 7, 2024

As deep learning models evolve, their growing complexity demands high-performance GPUs to ensure efficient inference serving. Many organizations rely on cloud services like AWS, Azure, or GCP for these GPU-powered workloads, but a growing number of businesses are opting to build their own in-house model serving infrastructure.

Dynatrace

DECEMBER 15, 2022

Besides the traditional system hardware, storage, routers, and software, ITOps also includes virtual components of the network and cloud infrastructure. Although modern cloud systems simplify tasks, such as deploying apps and provisioning new hardware and servers, hybrid cloud and multicloud environments are often complex.

The Netflix TechBlog

NOVEMBER 9, 2021

By Liwei Guo, Ashwin Kumar Gopi Valliammal, Raymond Tam, Chris Pham, Agata Opalach, and Weibo Ni. AV1 is the first high-efficiency video codec format with a royalty-free license from the Alliance for Open Media (AOMedia), made possible by a wide-ranging industry commitment of expertise and resources.

Dynatrace

FEBRUARY 23, 2022

IaC, or infrastructure as code, codifies and manages IT infrastructure in software rather than in hardware. According to a Gartner report, “By 2023, 60% of organizations will use infrastructure automation tools as part of their DevOps toolchains, improving application deployment efficiency by 25%.”

Dynatrace

JUNE 10, 2024

As a result, organizations are implementing security analytics to manage risk and improve DevSecOps efficiency. Finally, observability helps organizations understand the connections between disparate software, hardware, and infrastructure resources. According to recent global research, CISOs’ security concerns are multiplying.

Dynatrace

SEPTEMBER 27, 2024

The app automatically builds baselines, important reference points for analyzing the environmental impact of individual hardware or software instances. In fact, most of the proposed optimizations for computational efficiency and improved performance will also reduce energy consumption. Let’s take some of the mystery out of it.

DZone

JANUARY 16, 2024

One such breakthrough is Software-Defined Networking (SDN), a game-changing method of network administration that adds flexibility, efficiency, and scalability. The convergence of software and networking technologies has cleared the way for ground-breaking advancements in the field of modern networking.

Dynatrace

AUGUST 8, 2024

These can be caused by hardware failures, configuration errors, or external factors such as cable cuts. This approach minimizes the impact of outages on end users and maximizes the efficiency of IT remediation efforts. A comprehensive DR plan with consistent testing is also critical to ensure that large recoveries work as expected.

The Netflix TechBlog

MARCH 8, 2021

They need specialized hardware, access to petabytes of images, and digital content creation applications with controlled licenses. Where we can gather and analyze the usage data to create efficiencies and automation. As an engineer, I can work anywhere with a standard laptop as long as I have an IDE and access to Stack Overflow.

Dynatrace

APRIL 21, 2023

State and local agencies must spend taxpayer dollars efficiently while building a culture that supports innovation and productivity. APM helps ensure that citizens experience strong application reliability and performance efficiency. million annually through retiring legacy technology debt and tool rationalization.

Dynatrace

NOVEMBER 1, 2022

Most IT incident management systems use some form of the following metrics to handle incidents efficiently and maintain uninterrupted service for an optimal customer experience. It shows how efficient your DevOps team is at quickly diagnosing a problem and implementing a fix. What are MTTD, MTTA, MTTF, and MTBF? Mean time to detect.
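As a rough illustration, MTTD and MTTA can be computed as simple averages over incident timestamps. This is a minimal sketch, not Dynatrace's method: the `Incident` record and its field names are hypothetical, MTTA is measured here from detection to acknowledgment (one of several conventions in use), and MTTF and MTBF are left out.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Incident:
    started: datetime       # when the problem actually began
    detected: datetime      # when monitoring first raised an alert
    acknowledged: datetime  # when a responder acknowledged the alert


def mean_duration(durations):
    """Arithmetic mean of a non-empty sequence of timedeltas."""
    durations = list(durations)
    return sum(durations, timedelta()) / len(durations)


def mttd(incidents):
    """Mean time to detect: average gap between start and detection."""
    return mean_duration(i.detected - i.started for i in incidents)


def mtta(incidents):
    """Mean time to acknowledge: average gap between detection and acknowledgment."""
    return mean_duration(i.acknowledged - i.detected for i in incidents)


# Two hypothetical incidents, both starting at t0.
t0 = datetime(2022, 11, 1, 9, 0)
incidents = [
    Incident(t0, t0 + timedelta(minutes=4), t0 + timedelta(minutes=10)),
    Incident(t0, t0 + timedelta(minutes=6), t0 + timedelta(minutes=8)),
]
print("MTTD:", mttd(incidents))  # (4 + 6) / 2 = 5 minutes
print("MTTA:", mtta(incidents))  # (6 + 2) / 2 = 4 minutes
```

Keeping raw timestamps per incident, rather than precomputed durations, lets the same records feed other metrics later.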

Percona

NOVEMBER 9, 2023

Kubernetes can be complex, which is why we offer comprehensive training that equips you and your team with the expertise and skills to manage database configurations, implement industry best practices, and carry out efficient backup and recovery procedures.

Dynatrace

MARCH 28, 2024

While generative AI has received much of the attention since 2022 for enabling innovation and efficiency, various forms of AI (generative, causal, and predictive) will work together to automate processes, introduce innovation, and support other activities in service of digital transformation.

Dynatrace

SEPTEMBER 30, 2021

Like any move, a cloud migration requires a lot of planning and preparation, but it also has the potential to transform the scope, scale, and efficiency of how you deliver value to your customers. This can fundamentally transform how they work, make processes more efficient, and improve the overall customer experience. Here are three.

Dynatrace

MAY 1, 2023

In addition to improved IT operational efficiency at a lower cost, ITOA also enhances digital experience monitoring for increased customer engagement and satisfaction. Additionally, ITOA gathers and processes information from applications, services, networks, operating systems, and cloud infrastructure hardware logs in real time.

DZone

NOVEMBER 10, 2023

Hyper-V: Enabling Cloud Virtualization

Hyper-V serves as a fundamental component in cloud computing environments, enabling efficient and flexible virtualization of resources. By leveraging Hyper-V, cloud service providers can optimize hardware utilization by running multiple virtual machines (VMs) on a single physical server.

VoltDB

DECEMBER 11, 2024

Use hardware-based encryption and ensure regular over-the-air updates to maintain device security. As data streams grow in complexity, processing efficiency can decline. Solution: Optimize edge workloads by deploying lightweight algorithms tailored for edge hardware. Balancing efficiency with carbon footprint reduction goals.

Dynatrace

AUGUST 26, 2020

There’s no other competing software that can provide this level of value with minimum effort and optimal hardware utilization that can scale up to web-scale! I’d like to stress the lean approach to hardware that our customers require for running Dynatrace Managed. Support for high memory instances. Impact on disk space.

Dynatrace

OCTOBER 7, 2021

Instead of worrying about infrastructure management functions, such as capacity provisioning and hardware maintenance, teams can focus on application design, deployment, and delivery. Serverless architecture offers several benefits for enterprises. Simplicity. The first benefit is simplicity. AWS serverless offerings.

The Netflix TechBlog

FEBRUARY 5, 2020

AV1 is a high performance, royalty-free video codec that provides 20% improved compression efficiency over our VP9† encodes. Our support for AV1 represents Netflix’s continued investment in delivering the most efficient and highest quality video streams. AV1-libaom compression efficiency as measured against VP9-libvpx.