Data Mesh — A Data Movement and Processing Platform @ Netflix

The Netflix TechBlog

AUGUST 1, 2022

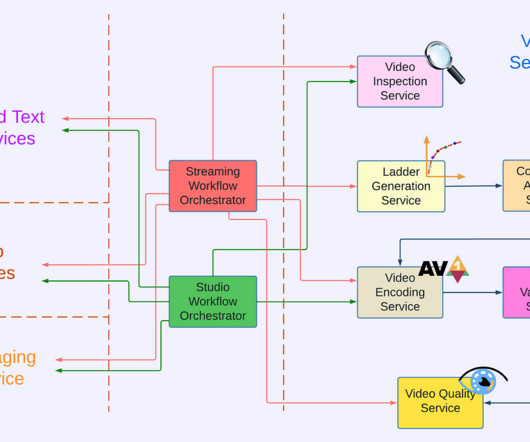

By Bo Lei, Guilherme Pires, James Shao, Kasturi Chatterjee, Sujay Jain, Vlad Sydorenko

Background

Real-time processing technologies (a.k.a. stream processing) are one of the key factors that enable Netflix to maintain its leading position in the competition to entertain our users.
