Batch vs. Real-Time Processing: Understanding the Differences

DZone

AUGUST 8, 2024

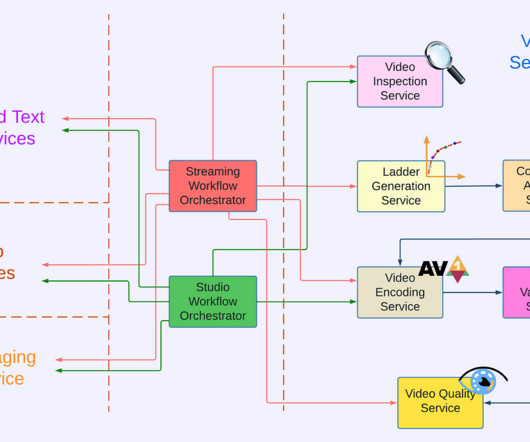

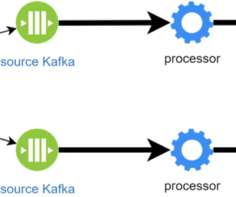

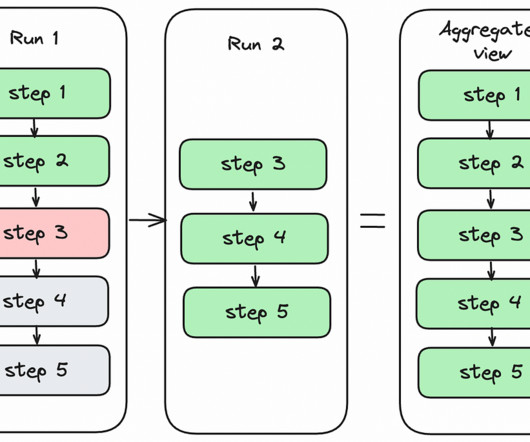

The choice between batch and real-time processing shapes the design, architecture, and ultimate success of a data pipeline. Understanding how these two paradigms differ helps organizations make informed decisions and get the full value out of their data.