Balancing Low Latency, High Availability, and Cloud Choice

VoltDB

MAY 14, 2024

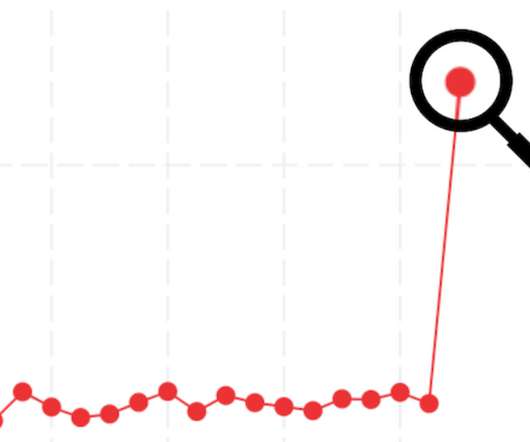

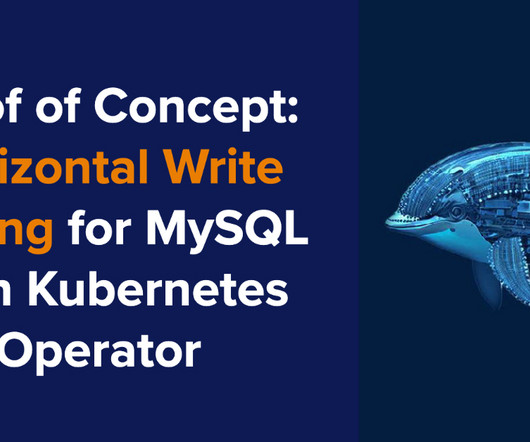

Cloud hosting is no longer just an option; in many cases, it is now the default choice. While it is efficient on paper, scaling-related issues usually pop up when testing moves into production. But the cloud computing market, having grown to a whopping $483.9 [...] Why are they refusing?
