Why Replace External Database Caches?

DZone

AUGUST 28, 2024

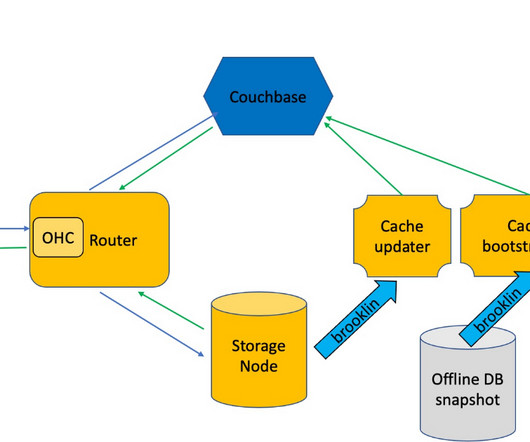

Teams often consider external caches when the existing database cannot meet the required service-level agreement (SLA). This is clearly a performance-oriented decision. However, external caches are not as simple as they are often made out to be.
