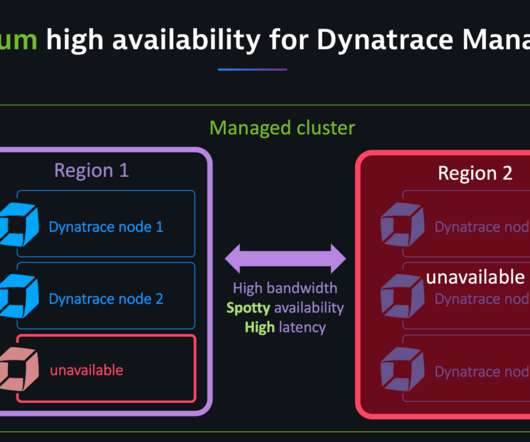

Storage handling improvements increase retention of transaction data for Dynatrace Managed

Dynatrace

JULY 29, 2021

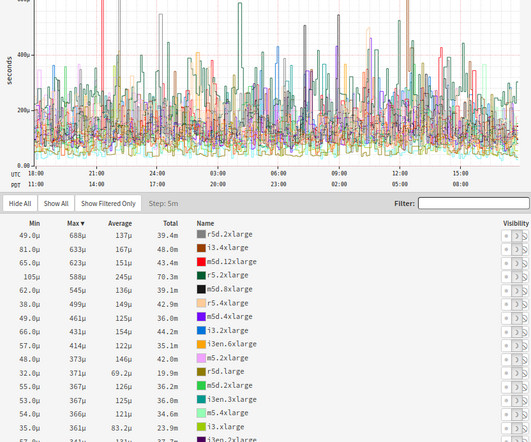

Using existing storage resources optimally is key to capturing the right data over time. Dynatrace stores transaction data (for example, PurePaths and code-level traces) on disk for 10 days by default. The storage handling improvements include increased storage space availability and improvements to Adaptive Data Retention.
