Efficient Multimodal Data Processing: A Technical Deep Dive

DZone

FEBRUARY 27, 2025

Handling multimodal data spanning text, images, videos, and sensor inputs requires resilient architecture to manage the diversity of formats and scale.

DZone

FEBRUARY 14, 2025

In the realm of modern software architecture, middleware plays a pivotal role in connecting various components of distributed systems. Efficient database operations in middleware can dramatically improve overall system performance, reduce latency, and enhance user experience.

DZone

FEBRUARY 26, 2025

Elasticsearch is an open-source, distributed search and analytics engine that handles large data sets and high-velocity queries efficiently. Scaling it effectively can be nuanced, however, since it requires a solid understanding of its architecture and of the performance tradeoffs involved.

Scalegrid

FEBRUARY 6, 2025

This article outlines the key differences in architecture, performance, and use cases to help determine the best fit for your workload. RabbitMQ follows a message broker model with advanced routing, while Kafka's event streaming architecture uses partitioned logs for distributed processing. What is RabbitMQ? What is Apache Kafka?

The Netflix TechBlog

NOVEMBER 12, 2024

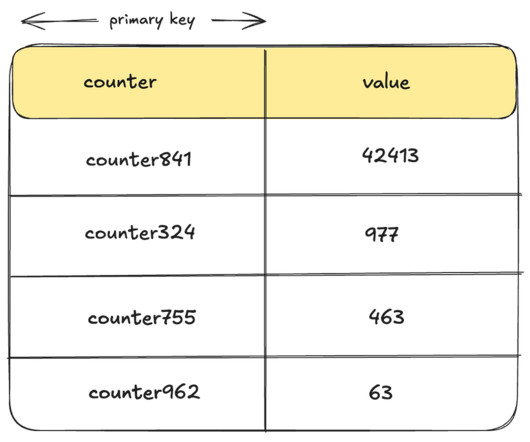

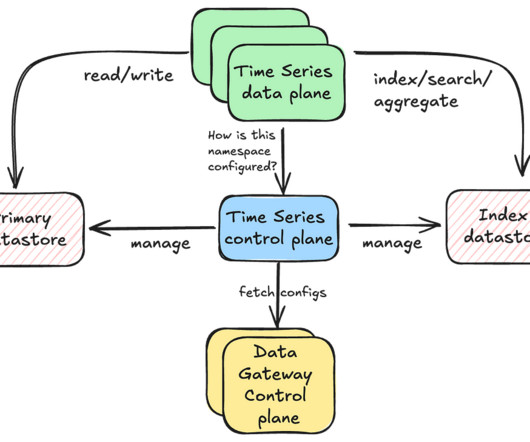

By: Rajiv Shringi, Oleksii Tkachuk, Kartik Sathyanarayanan. Introduction: In our previous blog post, we introduced Netflix’s TimeSeries Abstraction, a distributed service designed to store and query large volumes of temporal event data with low millisecond latencies. Today, we’re excited to present the Distributed Counter Abstraction.

DZone

FEBRUARY 27, 2024

Leveraging this hierarchical structure can significantly reduce latency and improve overall performance.

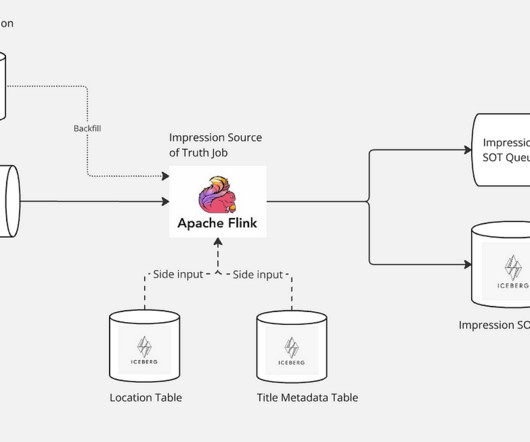

The Netflix TechBlog

FEBRUARY 14, 2025

Architecture Overview: The first pivotal step in managing impressions begins with the creation of a Source-of-Truth (SOT) dataset. Figure: Impression Source-of-Truth architecture. Ensuring High Quality Impressions: Maintaining the highest quality of impressions is a top priority.

Dynatrace

JANUARY 26, 2021

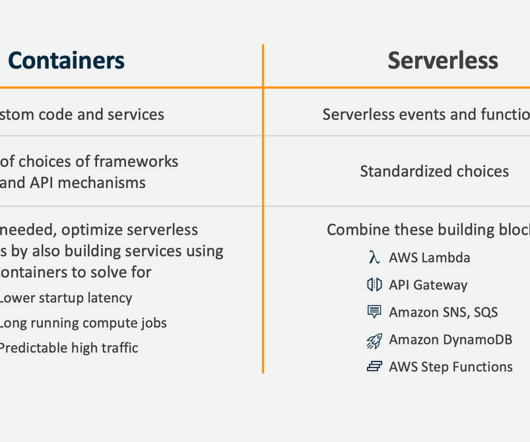

This allows teams to sidestep much of the cost and time associated with managing hardware, platforms, and operating systems on-premises, while also gaining the flexibility to scale rapidly and efficiently. In a serverless architecture, applications are distributed to meet demand and scale requirements efficiently.

Scalegrid

FEBRUARY 24, 2025

This guide will cover how to distribute workloads across multiple nodes, set up efficient clustering, and implement robust load-balancing techniques. This decoupling is crucial in modern architectures where scalability and fault tolerance are paramount.

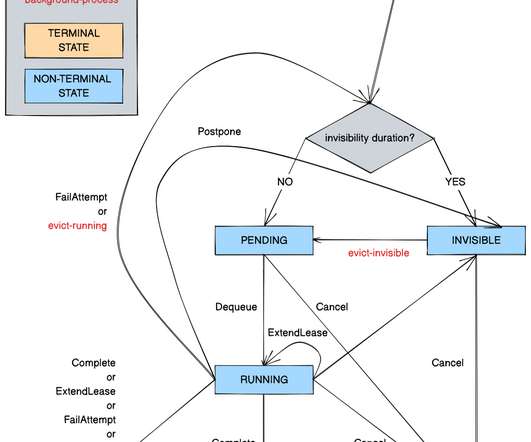

The Netflix TechBlog

SEPTEMBER 29, 2022

Timestone: Netflix’s High-Throughput, Low-Latency Priority Queueing System with Built-in Support for Non-Parallelizable Workloads, by Kostas Christidis. Introduction: Timestone is a high-throughput, low-latency priority queueing system we built in-house to support the needs of Cosmos, our media encoding platform. Over the past 2.5

The Netflix TechBlog

SEPTEMBER 18, 2024

These include challenges with tail latency and idempotency, managing “wide” partitions with many rows, handling single large “fat” columns, and slow response pagination. Data Model: At its core, the KV abstraction is built around a two-level map architecture.

Dynatrace

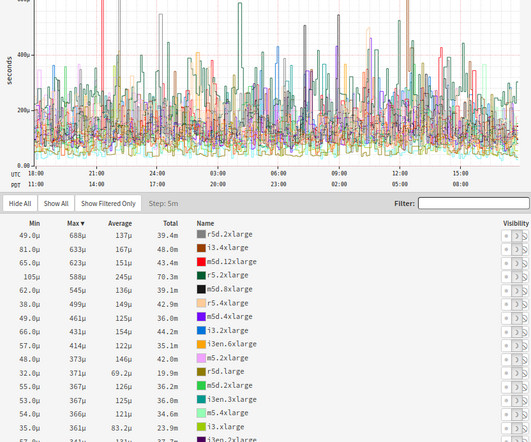

JANUARY 14, 2022

The following figure shows the high-level architecture where any load testing solution (e.g. The optimization goal was to improve the application efficiency, that is to improve the ratio between service throughput and cloud costs while not increasing the application latency (e.g. below 500ms) and error rates (e.g.

The Netflix TechBlog

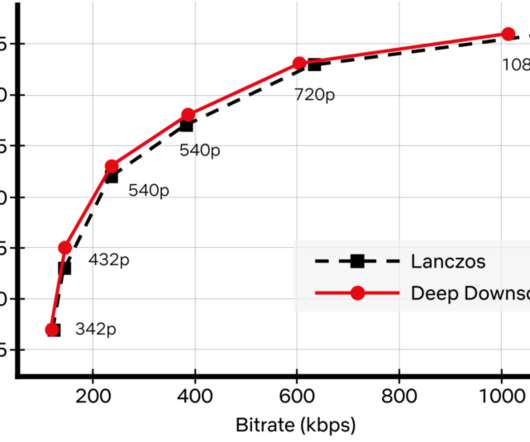

NOVEMBER 17, 2022

While conventional video codecs remain prevalent, NN-based video encoding tools are flourishing and closing the performance gap in terms of compression efficiency. Architecture of the deep downscaler model, consisting of a preprocessing block followed by a resizing block. How do we apply neural networks at scale efficiently?

The Netflix TechBlog

OCTOBER 8, 2024

Rajiv Shringi, Vinay Chella, Kaidan Fullerton, Oleksii Tkachuk, Joey Lynch. Introduction: As Netflix continues to expand and diversify into various sectors like Video on Demand and Gaming, the ability to ingest and store vast amounts of temporal data, often reaching petabytes, with millisecond access latency has become increasingly vital.

The Netflix TechBlog

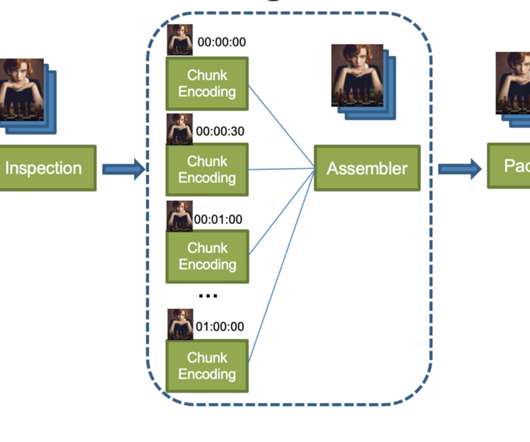

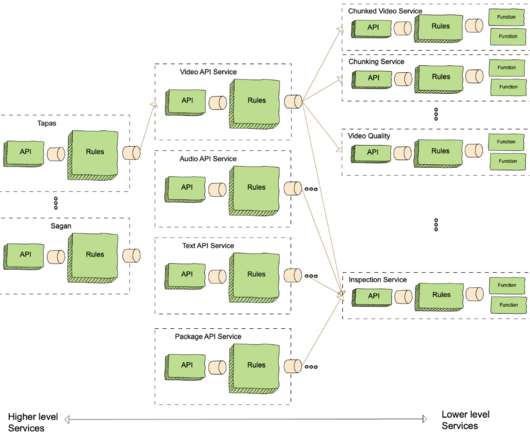

SEPTEMBER 24, 2021

Table 1: Movie and File Size Examples. Initial Architecture: A simplified view of our initial cloud video processing pipeline is illustrated in the following diagram. Figure 1: A Simplified Video Processing Pipeline. With this architecture, chunk encoding is very efficient and processed in distributed cloud computing instances.

Dynatrace

SEPTEMBER 13, 2023

This is a set of best practices and guidelines that help you design and operate reliable, secure, efficient, cost-effective, and sustainable systems in the cloud. The framework comprises six pillars: Operational Excellence, Security, Reliability, Performance Efficiency, Cost Optimization, and Sustainability.

Dynatrace

FEBRUARY 4, 2021

As a discipline, SRE focuses on improving software system reliability across key categories including availability, performance, latency, efficiency, capacity, and incident response. According to Google, “SRE is what you get when you treat operations as a software problem.” Solving for SR.

Dynatrace

MAY 23, 2024

Stream processing: One approach to such a challenging scenario is stream processing, a computing paradigm and software architectural style for data-intensive software systems that emerged to cope with requirements for near real-time processing of massive amounts of data. This significantly increases event latency.

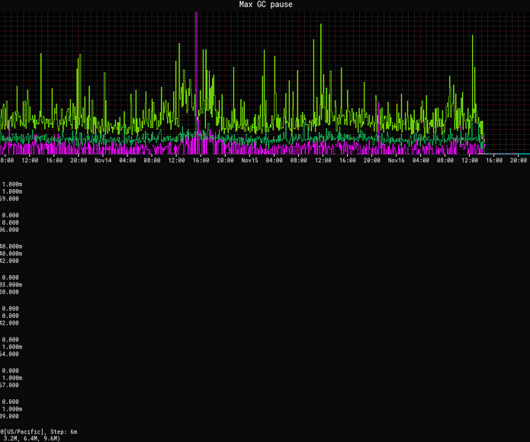

The Netflix TechBlog

MARCH 5, 2024

Reduced tail latencies: In both our gRPC and DGS Framework services, GC pauses are a significant source of tail latencies. In fact, we’ve found for our services and architecture that there is no such trade-off. We considered that an acceptable trade-off, as avoiding pauses provided benefits that would outweigh that overhead.

Dynatrace

OCTOBER 4, 2022

While data lakes and data warehousing architectures are commonly used modes for storing and analyzing data, a data lakehouse is an efficient third way to store and analyze data that unifies the two architectures while preserving the benefits of both. Data lakehouses deliver the query response with minimal latency.

Dynatrace

JANUARY 31, 2024

Retrieval-augmented generation emerges as the standard architecture for LLM-based applications: Given that LLMs can generate factually incorrect or nonsensical responses, retrieval-augmented generation (RAG) has emerged as an industry standard for building GenAI applications.

Dynatrace

OCTOBER 1, 2021

As dynamic systems architectures increase in complexity and scale, IT teams face mounting pressure to track and respond to conditions and issues across their multi-cloud environments. An advanced observability solution can also be used to automate more processes, increasing efficiency and innovation among Ops and Apps teams.

Scalegrid

OCTOBER 17, 2019

Moving to a multithreaded architecture will require extensive rewrites. But that causes a problem with PostgreSQL’s architecture – forking a process becomes expensive when transactions are very short, as the common wisdom dictates they should be. The PostgreSQL Architecture | Source. The Connection Pool Architecture.

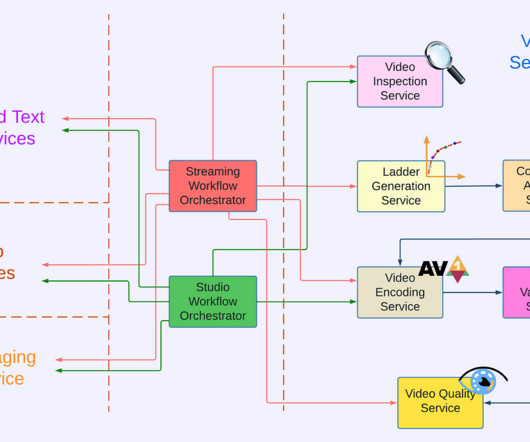

The Netflix TechBlog

JANUARY 10, 2024

This architecture shift greatly reduced the processing latency and increased system resiliency. We expanded pipeline support to serve our studio/content-development use cases, which had different latency and resiliency requirements as compared to the traditional streaming use case.

Dynatrace

APRIL 7, 2023

Dynatrace is a launch partner in support of AWS Lambda Response Streaming, a new capability enabling customers to improve the efficiency and performance of their Lambda functions. Customers can use AWS Lambda Response Streaming to improve performance for latency-sensitive applications and return larger payload sizes.

Dynatrace

APRIL 5, 2021

The 2014 launch of AWS Lambda marked a milestone in how organizations use cloud services to deliver their applications more efficiently, by running functions at the edge of the cloud without the cost and operational overhead of on-premises servers. AWS continues to improve how it handles latency issues.

Dynatrace

APRIL 8, 2024

This blog explores how vertically integrated risk management solutions that use AI and automation enable unparalleled visibility, control, and efficiency for risk management in banking. They can accomplish this all while delivering transformation efficiency and economies of scale for IT functions that maintain risk management infrastructure.

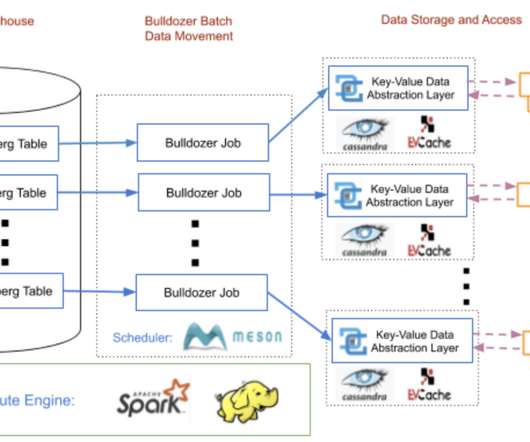

The Netflix TechBlog

OCTOBER 27, 2020

At Netflix, we also heavily embrace a microservice architecture that emphasizes separation of concerns. The data warehouse is not designed to serve point requests from microservices with low latency. Therefore, we must efficiently move data from the data warehouse to a global, low-latency and highly-reliable key-value store.

The Netflix TechBlog

MARCH 1, 2021

It supports both high throughput services that consume hundreds of thousands of CPUs at a time, and latency-sensitive workloads where humans are waiting for the results of a computation. The subsystems all communicate with each other asynchronously via Timestone, a high-scale, low-latency priority queuing system.

The Netflix TechBlog

SEPTEMBER 2, 2020

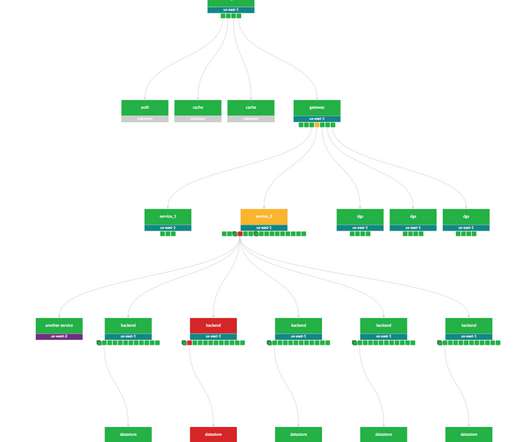

Edgar helps Netflix teams troubleshoot distributed systems efficiently with the help of a summarized presentation of request tracing, logs, analysis, and metadata. While this abundance of dashboards and information is by no means unique to Netflix, it certainly holds true within our microservices architecture. What is Edgar?

The Netflix TechBlog

JUNE 4, 2019

Because microprocessors are so fast, computer architecture design has evolved towards adding various levels of caching between compute units and the main memory, in order to hide the latency of bringing the bits to the brains. This avoids thrashing caches too much for B and evens out the pressure on the L3 caches of the machine.

Dynatrace

MARCH 29, 2024

While Kubernetes’ usability and ubiquity make it the ideal environment for cloud-based production tasks, operational oversight and resource management challenges can frustrate DevOps efforts to drive efficiency. You can ask for the best configuration to reduce latency or improve the user experience.”

The Netflix TechBlog

SEPTEMBER 8, 2020

We tried a few iterations of what this new service should look like, and eventually settled on a modern architecture that aimed to give more control of the API experience to the client teams. For us, it means that we now need to have ~15 MDN tabs open when writing routes :) Let’s briefly discuss the architecture of this microservice.

VoltDB

DECEMBER 11, 2024

By bringing computation closer to the data source, edge-based deployments reduce latency, enhance real-time capabilities, and optimize network bandwidth. As data streams grow in complexity, processing efficiency can decline. Increased latency during peak loads. Balancing efficiency with carbon footprint reduction goals.

High Scalability

SEPTEMBER 8, 2018

RISELabs, those wonderfully innovative folks over at Berkeley, have uplifted their Anna database (a shared-nothing, thread-per-core architecture that achieves lightning-fast speeds by avoiding all coordination mechanisms) to become cloud-aware. Our experiments show an impressive level of both performance and cost efficiency.

Dynatrace

JULY 18, 2023

This is especially crucial in microservice architectures, where the number of components can be overwhelming. However, scaling up software development requires more tools along the software product lifecycle, which must be configured promptly and efficiently.

Percona

NOVEMBER 9, 2023

Kubernetes can be complex, which is why we offer comprehensive training that equips you and your team with the expertise and skills to manage database configurations, implement industry best practices, and carry out efficient backup and recovery procedures.

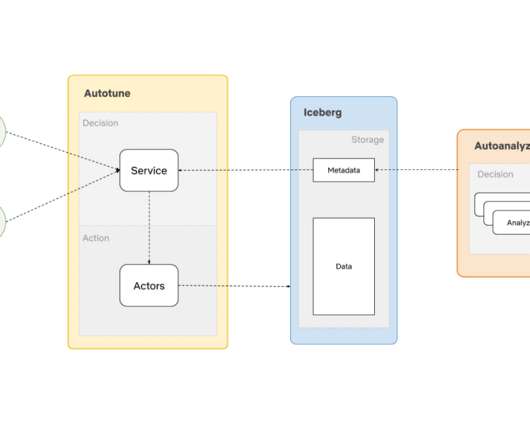

The Netflix TechBlog

DECEMBER 21, 2020

We built AutoOptimize to efficiently and transparently optimize the data and metadata storage layout while maximizing their cost and performance benefits. This article will list some of the use cases of AutoOptimize, discuss the design principles that help enhance efficiency, and present the high-level architecture.

The Netflix TechBlog

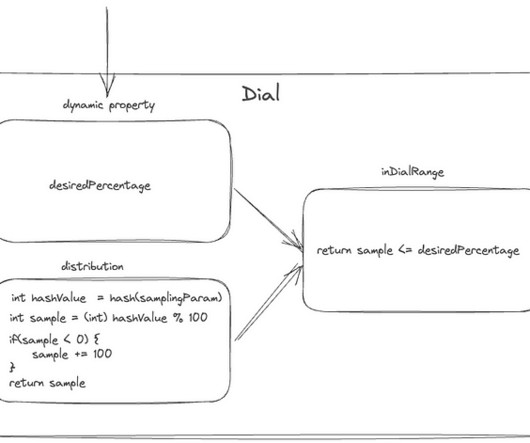

JULY 12, 2019

The net result is, for many datasets, vastly more efficient use of RAM. and can achieve orders of magnitude more efficient data access, which opens up many possibilities. Old Gatekeeper Architecture: This model had several problems associated with it: This process was completely I/O bound and put a lot of load on upstream systems.

All Things Distributed

JULY 20, 2015

Today, I want to explore the Amazon ECS architecture and what this architecture enables. This architecture affords Amazon ECS high availability, low latency, and high throughput because the data store is never pessimistically locked. Below is a diagram of the basic components of Amazon ECS: How we coordinate the cluster.

The Netflix TechBlog

JUNE 13, 2023

By collecting and analyzing key performance metrics of the service over time, we can assess the impact of the new changes and determine if they meet the availability, latency, and performance requirements. A/B testing is also a key technique in migrations where the updates to the architecture involve changing device contracts as well.

Dynatrace

DECEMBER 15, 2022

This includes response time, accuracy, speed, throughput, uptime, CPU utilization, and latency. Adding application security to development and operations workflows increases efficiency. This is the number of failures that affect users’ ability to use an application divided by the total time in service. Performance.

Adrian Cockcroft

MAY 6, 2023

I don’t advocate “Serverless Only”, and I recommended that if you need sustained high traffic, low latency and higher efficiency, then you should re-implement your rapid prototype as a continuously running autoscaled container, as part of a larger serverless event driven architecture, which is what they did.
