Rebuilding Netflix Video Processing Pipeline with Microservices

The Netflix TechBlog

JANUARY 10, 2024

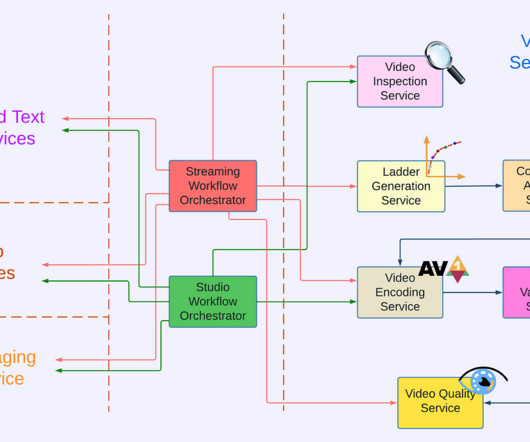

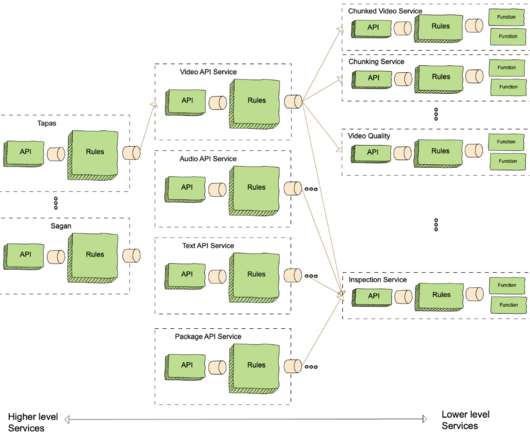

The Netflix video processing pipeline went live with the launch of our streaming service in 2007. We have since rebuilt it on a microservices architecture, and this architecture shift greatly reduced processing latency and increased system resiliency. The service also provides options for fine-tuning latency, throughput, and other properties; for example, it can divide the input video into small chunks.
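To make the chunking idea concrete, here is a minimal sketch. It is not Netflix's implementation. The `Chunk` type and the `split_into_chunks` function are hypothetical. The sketch also assumes that chunks are cut at fixed time offsets measured in integer milliseconds. A production encoder may instead need to align cuts to keyframe or scene boundaries. The sketch only shows how a fixed chunk length turns one long video into independent units of work that separate workers could process in parallel.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Chunk:
    """A hypothetical unit of work: one time slice of the input video."""
    index: int
    start_ms: int  # inclusive start offset in milliseconds
    end_ms: int    # exclusive end offset in milliseconds


def split_into_chunks(duration_ms: int, chunk_ms: int) -> list[Chunk]:
    """Split [0, duration_ms) into consecutive chunks of at most chunk_ms.

    Integer milliseconds avoid floating-point drift at chunk boundaries.
    Every chunk has length chunk_ms except possibly the last, which is shorter.
    """
    if duration_ms <= 0 or chunk_ms <= 0:
        raise ValueError("duration_ms and chunk_ms must be positive")
    # Ceiling division: number of chunks needed to cover the whole video.
    count = -(-duration_ms // chunk_ms)
    return [
        Chunk(i, i * chunk_ms, min((i + 1) * chunk_ms, duration_ms))
        for i in range(count)
    ]


if __name__ == "__main__":
    for chunk in split_into_chunks(95_000, 30_000):
        print(chunk)
```

For example, `split_into_chunks(95_000, 30_000)` yields four chunks, and the last one covers 90,000 to 95,000 ms. In this simplified model, shorter chunks produce more units that can run in parallel, which tends to lower end-to-end latency. Each chunk also adds scheduling and boundary overhead, so this is the kind of latency versus throughput knob the paragraph above describes.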
